State-of-the-art machine-learning-based real-time control of large, complex networks such as electric power systems is largely bottlenecked by the curse of dimensionality. Even the simplest control designs are numerically complex to carry out. The problem becomes more challenging still when the network model is unknown, because additional learning time must then be accommodated.

This project investigates new reinforcement learning (RL) approaches for cyber-physical autonomy to bridge the gap between current intelligent systems and human-level intelligence. Many cyber-physical systems (CPS) are distributed, heterogeneous, and high-dimensional, which makes hand-coded functions and task-specific information hard to design into the learning scheme. A large amount of training data is often required to achieve the desired performance; however, this limits generalization to other tasks.

Unmanned Aircraft Systems (UASs), or drones, have tremendous scientific, military, and civilian potential for data collection, monitoring, and interaction with the environment. These activities require high levels of reasoning, perception, and control, and the flexibility to adapt to changing environments. However, like other automated agents, UASs do not possess the ability to refocus their attention or reallocate resources to adapt to new scenarios and adjust performance.

Human-driven vehicles (HDVs) and automated vehicles (AVs) of all levels (Levels 1-5, AVs1-5) may share the highways for the foreseeable future. The increasing heterogeneity and diversity of vehicle autonomy may jeopardize safe and harmonious interaction among vehicles with mixed autonomy on highways and threaten the safety of all vehicles. This may exacerbate an already growing and alarming national concern about traffic safety.

Artificial Intelligence (AI) has shown superior performance in enhancing driving safety in advanced driver-assistance systems (ADAS). State-of-the-art deep neural networks (DNNs) achieve high accuracy at the expense of increased model complexity, which raises the computational burden on vehicles' onboard processing units for ADAS inference tasks. The primary goal of this project is to develop innovative collaborative AI inference strategies that build on the emerging edge computing paradigm.

This NSF CIVIC grant will provide a sustainable, scalable, and transferable proof of concept for addressing the spatial mismatch between housing affordability and jobs in U.S. cities by co-creating a Community Hub for Smart Mobility (CHSM) in vulnerable neighborhoods with civic partners. This spatial mismatch causes commuter traffic congestion, resulting in an annual loss of $29 billion to the U.S. economy.

Emerging communication and vehicle technologies will allow future autonomous vehicles to be platooned together through wireless communications (cyber-connected) or by physically forming an actual train (physically connected). When physically connected, vehicles may dock to and undock from each other en route while still moving.

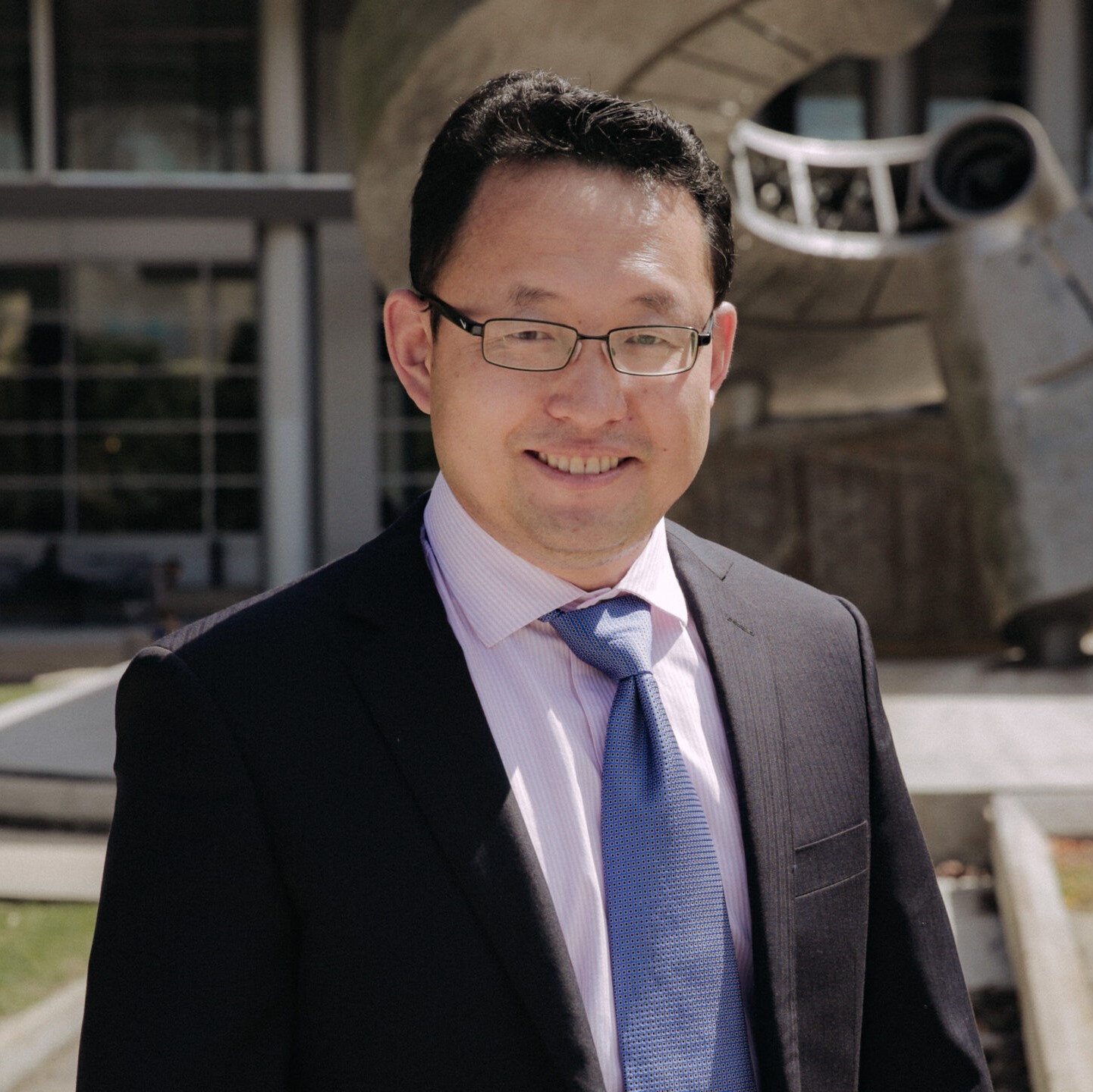

Dr. Xiaopeng (Shaw) Li is currently a Professor in the Department of Civil and Environmental Engineering at the University of Wisconsin-Madison (UW-Madison). He previously served as the director of the National Institute for Congestion Reduction (NICR). He is a recipient of a National Science Foundation (NSF) CAREER award. He has served as the PI or a co-PI for a number of federal, state, and industry grants, with a total budget of around $30 million. He has published over 110 peer-reviewed journal papers. His major research interests include automated vehicle traffic control and connected & interdependent infrastructure systems. He received a B.S. degree (2006) in civil engineering from Tsinghua University, China, and an M.S. degree (2007) and a Ph.D. degree (2011) in civil engineering, along with an M.S. degree (2010) in applied mathematics, from the University of Illinois at Urbana-Champaign, USA.

This Cyber-Physical Systems (CPS) grant will focus on the development of an urban traffic management system, which is driven by public needs for improved safety, mobility, and reliability within metropolitan areas. Future cities will be radically transformed by the Internet of Things (IoT), which will provide ubiquitous connectivity between physical infrastructure, mobile assets, humans, and control systems.